This post highlights the performance improvements in Sitecore Publishing Service v2: how it uses hardware resources, how it speeds up the publishing process, and how it mitigates network latency.

The new Sitecore Publishing Service brings massive performance improvements over the old publishing mechanism. The publishing component was completely redesigned with the following performance goals in mind:

Efficiency in Consuming Hardware Resources

The more efficient the service is, the cheaper it is to run in the cloud. The new publishing service is designed to consume as little CPU and memory as possible. Considerable effort went into making the code efficient, including multiple iterations of CPU and memory profiling, redesign, and refactoring. This led to many optimizations, such as making sure that the service releases unused references as early as possible. The service even deduplicates string objects that repeat across items, such as language names (e.g. “en”), so that all items reference one shared instance instead of each holding its own copy. Such optimizations have massively decreased the memory footprint.
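Deduplicating repeated strings is commonly called string interning. The Python sketch below illustrates only the general idea; the service itself is not written in Python, and `ItemVariant`, `StringPool`, and `load_variants` are hypothetical names, not part of any Sitecore API. Every language string read for an item is routed through a pool that returns one canonical instance per distinct value.

```python
from dataclasses import dataclass


@dataclass
class ItemVariant:
    """Hypothetical, simplified item variant: an item ID plus language and version."""
    item_id: str
    language: str
    version: int


class StringPool:
    """Keeps one canonical instance of each distinct string value."""

    def __init__(self) -> None:
        self._pool: dict[str, str] = {}

    def intern(self, value: str) -> str:
        # Return the first instance stored for this value, storing it if it is new.
        return self._pool.setdefault(value, value)

    def __len__(self) -> int:
        # Number of distinct values held by the pool.
        return len(self._pool)


def load_variants(rows, pool: StringPool) -> list[ItemVariant]:
    # Each row is (item_id, language, version) as read from a (hypothetical) data source.
    # The language string goes through the pool, so equal values end up as one shared object.
    return [ItemVariant(item_id, pool.intern(lang), version) for item_id, lang, version in rows]
```

With many variants sharing a handful of language values, the pool keeps one object per value alive no matter how many items reference it. Python's built-in `sys.intern` has a similar effect; a dictionary-based pool is shown here because it can be scoped to a single job and discarded afterwards.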

The publishing service has been load tested using a large dataset of more than 1 million item variants (languages and versions).

During this test, CPU usage was about 17% and memory usage did not exceed 400 MB.

Speeding Up the Publishing Process

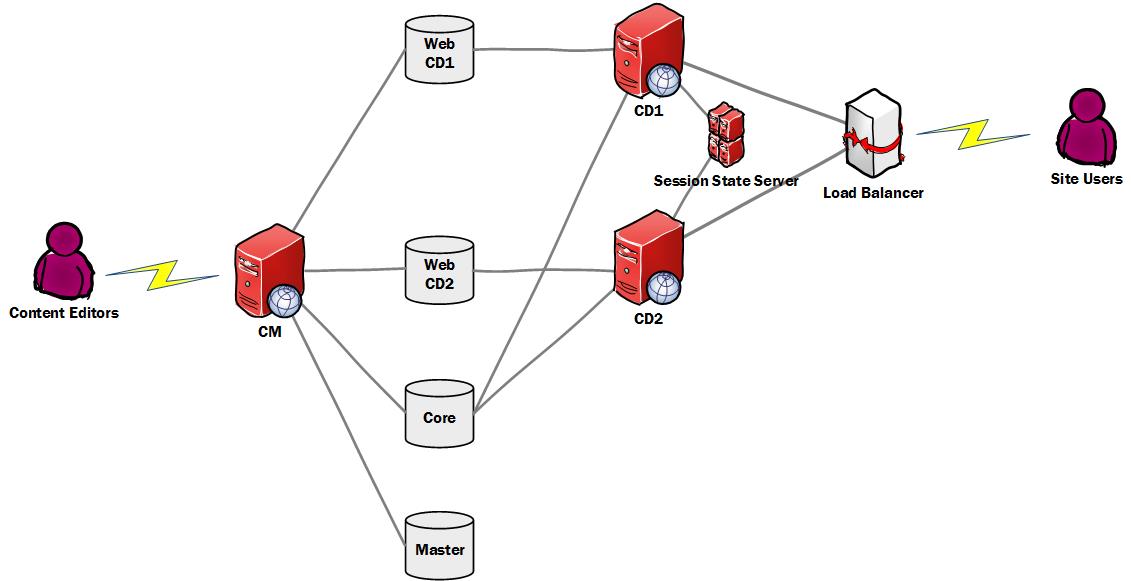

One of the main differences between the new and old publishing is the data layer. Unlike the old publishing, the publishing service doesn't talk to the databases through the Sitecore item APIs. Instead, it uses its own data layer, which performs database operations only in bulk. Bulk operations improve performance dramatically because they cut the number of round trips to the database, which mitigates network latency problems (see the next section).
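To see why bulk operations matter, consider the round trips alone. The sketch below is a simplified cost model, not a measurement: it assumes every database call pays a fixed network latency and ignores query execution and transfer time. The function names and the 5 ms latency used in the example are hypothetical.

```python
import math


def round_trips(item_count: int, batch_size: int) -> int:
    """Database calls needed to read item_count rows, batch_size rows per call."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return math.ceil(item_count / batch_size)


def latency_cost_seconds(item_count: int, batch_size: int, latency_ms: float) -> float:
    """Time spent purely on network round trips (query and transfer time are ignored)."""
    return round_trips(item_count, batch_size) * latency_ms / 1000.0


def batches(ids, batch_size):
    """Yield consecutive slices of ids, each at most batch_size long."""
    for start in range(0, len(ids), batch_size):
        yield ids[start:start + batch_size]
```

For example, reading 1,000,000 items one at a time with an assumed 5 ms round trip spends `latency_cost_seconds(1_000_000, 1, 5)`, or 5000 seconds, on latency alone, while batches of 1,000 need only 1,000 round trips, or 5 seconds. The same data is read either way; only the number of times the latency is paid changes.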

Another difference is that most of the processing in the publishing service follows a parallel, pipelined approach. For example, while one batch of items is being evaluated for restrictions, the next batch is being retrieved from the database at the same time.
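Such a pipeline can be sketched as two stages connected by a bounded queue: one thread fetches batches while another evaluates them. The Python below is an illustrative sketch, not the service's implementation; the stage functions, the in-memory batch source, and the `is_publishable` predicate are hypothetical stand-ins for bulk database reads and restriction checks.

```python
import queue
import threading

END_OF_STREAM = None  # sentinel telling the consumer that no more batches will arrive


def fetch_batches(item_ids, batch_size, out_q):
    """Stage 1 (hypothetical): produce batches, standing in for bulk database reads."""
    for start in range(0, len(item_ids), batch_size):
        out_q.put(item_ids[start:start + batch_size])
    out_q.put(END_OF_STREAM)


def evaluate_restrictions(in_q, is_publishable, results):
    """Stage 2: filter each batch while stage 1 is already fetching the next one."""
    while True:
        batch = in_q.get()
        if batch is END_OF_STREAM:
            break
        results.extend(item for item in batch if is_publishable(item))


def run_pipeline(item_ids, batch_size, is_publishable):
    # Bounded queue: the fetcher can run at most two batches ahead of the evaluator.
    q = queue.Queue(maxsize=2)
    results = []
    fetcher = threading.Thread(target=fetch_batches, args=(item_ids, batch_size, q))
    evaluator = threading.Thread(target=evaluate_restrictions, args=(q, is_publishable, results))
    fetcher.start()
    evaluator.start()
    fetcher.join()
    evaluator.join()
    return results
```

Bounding the queue caps how much fetched data waits in memory while still letting reads overlap with evaluation. In Python, threads give the most benefit here when the fetching stage is I/O-bound, as a database read is.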

The new publishing service also uses in-memory indexed trees, which capture the state of the source and target databases at the start of each publish job. These indexes let the service do as much of its work as possible in memory, without talking to the database.
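Answering questions from in-memory snapshots can be sketched as follows. This Python example is hypothetical: `ItemState`, the `revision` marker used to detect changes, and `TreeIndex` are illustrative assumptions, not the service's actual data model. Each index is built once per job; after that, walking a subtree and comparing source against target needs no database calls.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ItemState:
    """Hypothetical snapshot row: an item, its parent, and a revision marker."""
    item_id: str
    parent_id: Optional[str]
    revision: str


class TreeIndex:
    """In-memory snapshot of one database, indexed by item ID and by parent."""

    def __init__(self, items):
        self.by_id = {item.item_id: item for item in items}
        self.children = defaultdict(list)
        for item in items:
            if item.parent_id is not None:
                self.children[item.parent_id].append(item.item_id)

    def descendants(self, root_id):
        """All item IDs under root_id (inclusive), found without querying a database."""
        stack, found = [root_id], []
        while stack:
            current = stack.pop()
            if current in self.by_id:
                found.append(current)
                stack.extend(self.children.get(current, []))
        return found


def items_to_publish(source: TreeIndex, target: TreeIndex, root_id: str):
    """IDs under root_id that are missing from the target or differ in revision."""
    changed = []
    for item_id in source.descendants(root_id):
        target_item = target.by_id.get(item_id)
        if target_item is None or target_item.revision != source.by_id[item_id].revision:
            changed.append(item_id)
    return changed
```

A real publish job also has to handle items that exist only in the target (deletions), moves, and publishing restrictions; the sketch leaves those out to focus on the indexing idea.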

